Cerebras Systems has raised $1 billion in new funding at a $23 billion valuation, a sign of its growing influence in AI hardware. The milestone underscores the market’s eagerness for alternatives to established GPU giants like Nvidia. Cerebras’ wafer-scale chip technology aims to deliver a step change in AI processing power and efficiency.

As demand for advanced AI accelerators rises sharply, Cerebras is poised to challenge the dominance of traditional GPU suppliers with its innovative approach. By expanding production and infrastructure, the company aims to meet surging needs for faster, more scalable AI computing solutions worldwide. This funding round marks a critical step in reshaping AI chip competition.

Investors recognize Cerebras as a formidable player capable of addressing major AI workload demands through its revolutionary chip design. Its emergence signals a shift toward diversified AI hardware ecosystems, reducing reliance on single vendors and fostering greater innovation and resilience in the fast-evolving AI market landscape.

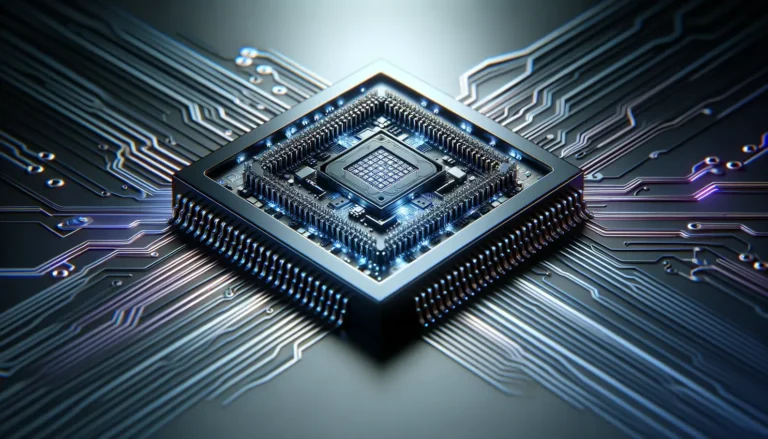

Background of Cerebras Systems and Its Technology

Cerebras Systems, founded in 2015 by former SeaMicro engineers, builds AI processors at wafer scale. The usual approach cuts a silicon wafer into many small dies. Cerebras instead turns an entire wafer into a single chip designed for AI workloads, a major departure from conventional GPU designs. Its technology targets faster AI training and inference, with efficient power use and parallel processing at unprecedented scale.

Its Wafer Scale Engine (WSE) chips, most recently the WSE-3, are roughly the size of a dinner plate and pack about 900,000 AI-optimized cores. Typical GPU chips are far smaller and integrate far fewer cores on a single die. Cerebras’ innovation centers on turning that scale into the speed needed to train and serve large, complex AI models.

By creating a monolithic chip using an entire silicon wafer, Cerebras achieves impressive parallelism and power efficiency, setting a new standard in AI infrastructure technology. This approach contrasts with traditional multi-chip GPU setups, which face latency and communication challenges.

Overview of wafer-scale AI chips versus traditional GPUs

Wafer-scale AI chips integrate hundreds of thousands of cores on a single piece of silicon, which greatly reduces the communication overhead between processing units. Compared with discrete GPUs, this design improves performance and energy efficiency in AI model training and inference. GPU-based systems instead network many smaller chips together, and each chip-to-chip link adds complexity and latency.

Cerebras’ wafer-scale processors are engineered specifically for AI workloads, pairing a custom architecture with a monolithic design. The result is faster, more power-efficient processing than traditional GPU solutions from companies like Nvidia, a direct challenge to the status quo in AI hardware.

The key advantage is lower inter-core communication delay. Data moves between cores on the same wafer rather than across links between separate chips, and the design can scale up to the full area of the wafer. This lets Cerebras chips train massive AI models faster and with less power than conventional GPU clusters, positioning wafer-scale chips as a transformative alternative in AI computing.
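A simple way to see why keeping traffic on one wafer matters is the standard latency-bandwidth (Hockney, or "alpha-beta") communication model. In this model, sending n bytes costs a fixed per-message latency plus n divided by the link bandwidth. The sketch below is illustrative only. The link parameters are hypothetical values chosen to show the shape of the trade-off, not published specifications for Cerebras or Nvidia hardware. The sketch also assumes that communication runs after compute and is not overlapped with it, which real training systems usually try to avoid.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    """Latency-bandwidth (alpha-beta) model of a single communication link.

    latency_s: fixed cost per message (alpha), in seconds.
    bandwidth_bps: sustained bandwidth (beta), in bytes per second.
    """
    latency_s: float
    bandwidth_bps: float

    def transfer_time(self, message_bytes: int) -> float:
        # t = alpha + n / beta
        return self.latency_s + message_bytes / self.bandwidth_bps


def step_time(compute_s: float, messages: int, message_bytes: int, link: Link) -> float:
    """Time for one step: compute, then the messages sent one after another (no overlap)."""
    return compute_s + messages * link.transfer_time(message_bytes)


# HYPOTHETICAL parameters for illustration only; not measured hardware figures.
ON_WAFER = Link(latency_s=1e-8, bandwidth_bps=1e12)
OFF_CHIP = Link(latency_s=1e-6, bandwidth_bps=1e11)

if __name__ == "__main__":
    for name, link in [("on-wafer", ON_WAFER), ("off-chip", OFF_CHIP)]:
        t = step_time(compute_s=1e-3, messages=1_000, message_bytes=1_000_000, link=link)
        print(f"{name}: {t * 1e3:.3f} ms per step")
```

With these made-up numbers, the off-chip step comes out roughly six times slower than the on-wafer step, and almost all of the gap is communication time. Different parameters change the magnitude. The model only shows why cutting per-hop latency and raising link bandwidth shortens each step.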

Cerebras’ previous milestones and partnerships

Before its recent funding, Cerebras made significant strides, starting with the 2019 launch of the WSE-1 and the CS-1 system, which brought it a valuation above $2 billion after raising $272 million. The WSE-2, released in 2021, featured 850,000 cores, and Cerebras said systems built on it could support models with over 120 trillion parameters.

The company forged major partnerships with AI leaders like OpenAI, signing a $10 billion deal in early 2026, and supported deployments powering Meta’s Llama API, Perplexity, and Hugging Face. Collaborations with national labs yielded performance improvements up to 500 times over traditional supercomputers.

International partnerships, such as with UAE-based G42 for multi-exascale supercomputing projects, further established Cerebras’ commercial presence. These achievements have affirmed its position as a key player challenging the GPU-dominated AI chip market.

Details of the $1 Billion Funding Round

The $1 billion round, which lifted Cerebras’ valuation to $23 billion, highlights strong investor confidence in its technology. It ranks among the largest raises in the AI chip sector and signals growing interest in suppliers beyond the traditional GPU makers.

The fresh funds will accelerate Cerebras’ ability to scale wafer-scale AI chip production and expand infrastructure globally. The company aims to meet soaring demand as AI workloads grow more complex and widespread. This financial boost positions Cerebras for significant market expansion in the coming years.

Investor enthusiasm also underscores the perceived need for alternatives to GPU-dominated markets. Cerebras’ unique wafer-scale approach offers distinct advantages, making it a compelling contender alongside industry giants like Nvidia and AMD.

Breakdown of funding amount, valuation, and lead investors

The round was led by top-tier venture capital firms and strategic industry partners. Not every participant has been named publicly, but the backers include firms known for supporting cutting-edge AI and semiconductor startups.

Following this round, Cerebras’ valuation reached $23 billion, roughly ten times the above-$2 billion valuation it held after its 2019 raise. That figure places it among the most valuable privately held AI chip companies.
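The size of the jump depends on which earlier valuation serves as the baseline. Below is a quick check using the two figures this article gives: $23 billion now and "above $2 billion" in 2019. The 2019 figure is a lower bound, so the ratio it produces is an upper bound on the multiple. The helper name `valuation_multiple` is illustrative.

```python
def valuation_multiple(new_valuation_usd: float, baseline_usd: float) -> float:
    """Return how many times larger the new valuation is than the baseline."""
    if baseline_usd <= 0:
        raise ValueError("baseline valuation must be positive")
    return new_valuation_usd / baseline_usd


NEW_VALUATION = 23e9    # post-round valuation reported in this article
BASELINE_2019 = 2e9     # "above $2 billion" in 2019, so this is a lower bound

# A lower-bound baseline yields an upper bound on the multiple.
print(f"multiple vs. $2B baseline: at most {valuation_multiple(NEW_VALUATION, BASELINE_2019):.1f}x")

# 2019 valuation at which the multiple would be exactly 10x; any higher baseline gives less.
print(f"10x threshold baseline: ${NEW_VALUATION / 10 / 1e9:.1f}B")
```

Against a $2 billion baseline the multiple is 11.5x. Any 2019 valuation of $2.3 billion or more would put it at or below 10x, which is why "roughly tenfold" is the safer description.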

The significant capital injection stands as a strong vote of confidence in Cerebras’ wafer-scale chip technology, its road map, and its potential to reshape AI hardware markets for data centers and enterprises worldwide.

Use of funds for scaling AI chip production and infrastructure

Most of the funds will go toward scaling wafer-scale chip production, enabling larger manufacturing runs and higher yields. Expanding fabrication partnerships is a key part of meeting growing shipment demand.

Cerebras will also invest heavily in enhancing global infrastructure, including data center integrations and software ecosystems to support seamless deployment of its AI processors at scale.

The capital supports research and development efforts aimed at further improving chip performance and efficiency, reinforcing Cerebras’ lead as a next-generation AI hardware provider challenging traditional GPU paradigms.

Market Analysis of AI Chip Competition

The AI chip market is becoming fiercely competitive, driven by skyrocketing demand for powerful processors to handle complex AI workloads. This surge pressures existing suppliers and opens space for disruptive new players. As AI adoption expands, finding alternatives to dominant GPU makers is critical to avoid supply bottlenecks and pricing pressures.

Industry leaders like Nvidia currently control the majority share, yet the growing workload diversity demands innovative chip designs tailored for specific AI tasks. Solutions enhancing speed, energy efficiency, and scale will gain preference amid increasing customer requirements in cloud and enterprise data centers. Market dynamics favor those offering distinct technological advantages.

Given the escalating complexity of AI models and expanded usage scenarios such as generative AI and large-scale language models, the market demands chips that can scale seamlessly. This creates fertile ground for entrants like Cerebras to challenge incumbents by addressing critical gaps in performance and cost-efficiency.

Supply crunch and demand for Nvidia alternatives

Global chip shortages have intensified supply constraints for Nvidia GPUs, triggering heightened interest in viable alternatives. Enterprises and cloud providers seek diversified suppliers to mitigate risks from single-vendor dependence amid rising AI demand. Cerebras emerges as a strong contender addressing this gap with wafer-scale innovations.

Because supply chain issues prevent Nvidia GPUs from being provisioned quickly, customers are evaluating new architectures that promise both performance gains and more stable supply. This dynamic fuels investment in emerging chipmakers building fundamentally different designs optimized for AI at scale.

The ongoing shortage and high cost of Nvidia solutions underscore the market’s urgent need for alternatives that can reliably serve next-generation AI workloads. Cerebras’ recent funding round reflects this growing demand and the case for more diversified chip ecosystems.

Market landscape including Nvidia, AMD, and other rivals

Nvidia remains the dominant AI chip provider, backed by a broad ecosystem, but it faces mounting competition from AMD and from startups building specialized AI accelerators. Each brings distinct strengths: AMD leverages its GPU lineage, while startups like Cerebras innovate with wafer-scale designs. This diversity gives AI hardware buyers more options.

Other competitors include Google with its TPU line and startups pushing custom AI silicon tailored for specific applications. The combined landscape is evolving rapidly, fragmenting traditional GPU hegemony and encouraging technology differentiation, especially in efficiency, speed, and scalability.

As enterprises prioritize hardware flexibility and performance, competition will accelerate innovation. Cerebras’ approach, which stands out for chip scale and core count, offers a compelling alternative that challenges incumbents and reshapes market expectations.

Expert Insights and Future Implications

Experts see Cerebras’ funding as a sign of growing industry demand for AI chip architectures beyond GPUs, a trend expected to reshape AI hardware strategy. Analysts call this a pivotal moment for market competition, one that encourages innovation and reduces reliance on a single vendor. The scale and efficiency gains of Cerebras’ chips position the company to drive significant shifts in how AI workloads are run.

Increasing model complexity and AI adoption across industries are making innovation in chip design crucial. Cerebras’ wafer-scale approach could accelerate breakthroughs in performance and scalability, influencing future AI infrastructure choices globally. The company’s success may inspire others to explore large-scale monolithic designs tailored for specialized AI tasks.

Overall, the industry anticipates a more balanced ecosystem emerging, where multiple chip providers coexist and compete. This diversification is viewed positively, potentially leading to cost reductions and technological advancements, which benefit AI developers, enterprises, and end users alike.

Analyst opinions on diversification and reduced single-vendor risk

Analysts stress the importance of diversification to mitigate the risks tied to dependence on one dominant vendor like Nvidia. Cerebras’ rise offers a strategic alternative that can lessen supply chain vulnerabilities and pricing pressures. Such diversification strengthens the AI hardware ecosystem and promotes resilience against market disruptions.

The $1 billion raise signals confidence in Cerebras’ ability to scale and compete, encouraging other players to intensify their innovation efforts. This competitive pressure drives vendors to enhance performance and efficiency, benefiting customers with more choices and reducing the risks of single-source reliance. The market trend favors a multi-vendor approach.

Experts also note that as AI models expand in size and complexity, different architectures will be needed. Multiple suppliers with diverse designs can better meet these challenges, ensuring a robust and flexible AI hardware landscape that supports various workload demands and enterprise needs.

Impact on data centers and potential market shifts

Cerebras’ technology promises major benefits for data centers, including faster AI training times and lower power consumption. These improvements can reduce operational costs and enable more complex AI applications, accelerating innovation across sectors reliant on large-scale AI models. The impact on data center infrastructure could be profound.

The growing presence of wafer-scale AI chips may prompt data centers to redesign hardware ecosystems, integrating these new processors alongside traditional GPUs. This hybrid approach could optimize workloads and improve scalability, making data centers more adaptable to evolving AI demands. Market dynamics are shifting toward such flexible architectures.

Potential market shifts include a redistribution of AI hardware spending toward emerging chipmakers like Cerebras. As enterprises seek performance gains and supply stability, demand may increasingly favor diverse chip solutions. This evolution challenges the current dominance and may lead to a more fragmented yet innovative AI hardware market landscape.