Core Sensors in Autonomous Vehicles

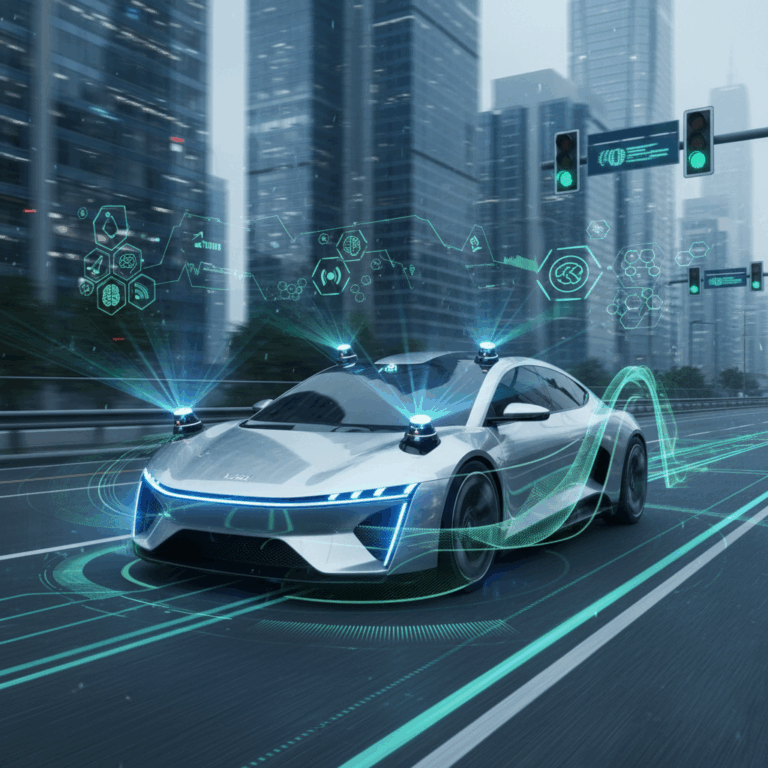

Autonomous vehicles depend on a range of sensors to perceive their environment accurately. These sensors work together to build a comprehensive picture of the vehicle’s surroundings.

Key sensors include radar, lidar, cameras, and ultrasonic systems. Each offers unique data that helps the vehicle navigate safely without human input.

Integrating these sensor technologies ensures reliable detection of objects, road conditions, and traffic elements vital for autonomous driving.

Radar and Lidar Technologies

Radar uses radio waves to measure the distance and relative speed of nearby vehicles and other objects. It remains effective in rain, fog, and darkness and works at long ranges, providing critical data for collision avoidance.
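As a rough illustration of how radar can measure speed, the sketch below applies the standard Doppler relation, in which the frequency shift of the reflected wave is proportional to the target's radial speed. The function name and the example numbers (a 77 GHz carrier and a 10 kHz shift) are assumptions chosen for illustration, not values taken from any particular vehicle.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def radial_speed_from_doppler(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Relative radial speed (m/s) of a target from its Doppler shift.

    The factor 2 accounts for the round trip of the wave: the shift is
    applied once on the way to the target and once on the way back.
    A positive shift means the target is closing on the radar.
    """
    return doppler_shift_hz * SPEED_OF_LIGHT_M_S / (2.0 * carrier_hz)


# Hypothetical measurement: 77 GHz carrier, 10 kHz Doppler shift.
speed = radial_speed_from_doppler(10_000.0, 77e9)
print(f"{speed:.2f} m/s")  # prints "19.47 m/s"
```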

Lidar employs laser light pulses to generate precise 3D maps of the environment. This detailed spatial data helps in identifying obstacles and road edges with high accuracy.
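To make the idea of a 3D map concrete, the sketch below converts a single lidar return (a measured range plus the beam's azimuth and elevation angles) into an x, y, z point. The axis convention (x forward, y left, z up) and the function name are assumptions made for this example; real sensors document their own conventions.

```python
import math


def lidar_return_to_point(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one lidar return to (x, y, z) in the sensor frame.

    Assumed convention: x forward, y left, z up. Azimuth is measured
    counterclockwise from the x axis in the horizontal plane, and
    elevation is measured upward from that plane.
    """
    azimuth = math.radians(azimuth_deg)
    elevation = math.radians(elevation_deg)
    horizontal = range_m * math.cos(elevation)  # projection onto the ground plane
    return (
        horizontal * math.cos(azimuth),
        horizontal * math.sin(azimuth),
        range_m * math.sin(elevation),
    )


# A return 10 m away, directly to the left of the sensor, at sensor height.
x, y, z = lidar_return_to_point(10.0, 90.0, 0.0)
print(round(x, 3), round(y, 3), round(z, 3))
```

Repeating this conversion for every return in a sweep yields the point cloud from which obstacles and road edges are extracted.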

Together, radar and lidar create complementary vision for autonomous cars, ensuring robust perception under diverse conditions.

Cameras and Ultrasonic Sensors

Cameras capture visual information such as traffic signals, lane markings, and pedestrians. They play a crucial role in object recognition and scene understanding for decision-making.

Ultrasonic sensors provide close-range detection useful for low-speed maneuvers like parking. They cover the area immediately around the vehicle, detecting nearby obstacles that radar or lidar might miss.
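A minimal sketch of ultrasonic ranging: the sensor emits a pulse, times the echo, and halves the round-trip distance. The speed of sound used here (343 m/s, dry air at about 20 °C) and the warning thresholds are illustrative assumptions, as are the function names.

```python
SPEED_OF_SOUND_M_S = 343.0  # dry air at about 20 °C; varies with temperature


def echo_distance_m(echo_time_s: float) -> float:
    """Distance to an obstacle from the round-trip time of an echo."""
    return SPEED_OF_SOUND_M_S * echo_time_s / 2.0


def parking_alert(distance_m: float, warn_m: float = 1.0, stop_m: float = 0.3) -> str:
    """Map a distance to a coarse alert level (thresholds are hypothetical)."""
    if distance_m <= stop_m:
        return "stop"
    if distance_m <= warn_m:
        return "warn"
    return "clear"


distance = echo_distance_m(0.003)  # a 3 ms echo is roughly half a meter
print(f"{distance:.4f} m -> {parking_alert(distance)}")  # prints "0.5145 m -> warn"
```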

By combining cameras with ultrasonic sensors, autonomous vehicles achieve detailed awareness both at a distance and in tight spaces.

Data Processing and Artificial Intelligence

Autonomous vehicles rely on powerful onboard computers to process massive streams of sensor data in real time. These systems execute complex algorithms that interpret the environment swiftly.

The integration of data processing and artificial intelligence allows cars to assess their surroundings and make the split-second decisions necessary for safe driving.

AI-powered systems translate raw sensor inputs into actionable commands, ensuring the vehicle reacts effectively to dynamic road situations without human intervention.

Onboard Computers and Algorithms

Onboard computers serve as the central brain of autonomous vehicles. They run sophisticated algorithms that fuse sensor data and generate a coherent picture of the vehicle’s environment.

These algorithms handle tasks such as object detection, path planning, and sensor fusion, enabling the system to respond quickly and accurately to changing scenarios.
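One simple way to picture sensor fusion is inverse-variance weighting, where each sensor's estimate counts in proportion to how much it is trusted. The sketch below is only an illustration of that idea, not a description of any production fusion stack, and the radar and lidar numbers are made up for the example.

```python
def fuse_measurements(measurements):
    """Fuse independent estimates of the same quantity.

    `measurements` is a list of (value, variance) pairs. Each value is
    weighted by 1 / variance, so less noisy sensors count for more.
    Returns (fused_value, fused_variance).
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    if any(variance <= 0 for _, variance in measurements):
        raise ValueError("variances must be positive")
    weights = [1.0 / variance for _, variance in measurements]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements)) / total_weight
    return fused_value, 1.0 / total_weight


# Hypothetical range to the same obstacle: radar is noisier than lidar here.
radar = (50.0, 0.25)   # meters, variance in m^2
lidar = (49.6, 0.01)
fused_range, fused_variance = fuse_measurements([radar, lidar])
print(f"{fused_range:.2f} m, variance {fused_variance:.4f}")  # prints "49.62 m, variance 0.0096"
```

The fused variance is smaller than either input variance, which is the main reason for combining sensors rather than picking the best one.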

Their real-time processing capability is critical, as delays could compromise safety when navigating complex traffic environments.

Machine Learning and Object Recognition

Machine learning models are trained on extensive datasets to recognize objects like pedestrians, cyclists, vehicles, and road signs with high precision. This ability is central to safe navigation.

Deep learning neural networks analyze camera and sensor inputs to classify objects, predict potential hazards, and interpret the driving context continuously during trips.
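The last step of a typical classifier can be sketched without any deep learning library: the network produces one raw score per class, and a softmax turns those scores into probabilities. The scores below are invented for illustration, and the class labels are a subset of the objects the text mentions.

```python
import math


def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(scores, labels):
    """Return the most probable label and its probability."""
    probabilities = softmax(scores)
    best = max(range(len(labels)), key=lambda i: probabilities[i])
    return labels[best], probabilities[best]


LABELS = ["pedestrian", "cyclist", "vehicle", "road sign"]
label, confidence = classify([2.0, 0.5, 1.0, -1.0], LABELS)  # hypothetical scores
print(label, round(confidence, 2))
```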

Through continual training, these systems improve over time, adapting to new environments and to rare situations that predefined, rule-based programs often fail to anticipate.

Behavior Prediction and Decision Making

Autonomous vehicles use AI to predict the behavior of surrounding road users by analyzing movement patterns and traffic rules. This foresight allows proactive safety measures.
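The simplest possible behavior predictor assumes another road user keeps its current velocity. The sketch below uses that constant-velocity assumption to project a position forward and to estimate time to collision. Real systems use learned models that are far richer than this, and the function names here are hypothetical.

```python
def predict_position(position, velocity, horizon_s):
    """Project an (x, y) position forward, assuming constant velocity."""
    return (
        position[0] + velocity[0] * horizon_s,
        position[1] + velocity[1] * horizon_s,
    )


def time_to_collision(gap_m, closing_speed_m_s):
    """Seconds until the gap closes, or None if the gap is not shrinking."""
    if closing_speed_m_s <= 0:
        return None
    return gap_m / closing_speed_m_s


# A pedestrian walking at 1.5 m/s along x, predicted 2 s ahead.
print(predict_position((0.0, 0.0), (1.5, 0.0), 2.0))  # prints "(3.0, 0.0)"

# A lead vehicle 30 m ahead, with the gap shrinking at 10 m/s.
print(time_to_collision(30.0, 10.0))  # prints "3.0"
```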

Decision-making algorithms evaluate these predictions along with route plans to determine optimal driving actions such as changes in speed, lane position, or yielding to others.
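One common way to frame this kind of decision is as cost minimization: each candidate action is scored on several criteria, and the action with the lowest weighted total is chosen. The criteria, weights, and scores below are all invented for illustration.

```python
def choose_action(candidates, weights):
    """Pick the candidate action with the lowest weighted cost.

    `candidates` maps an action name to a dict of cost terms, and
    `weights` maps each cost term to its importance.
    """
    def total_cost(costs):
        return sum(weights[term] * value for term, value in costs.items())

    return min(candidates, key=lambda name: total_cost(candidates[name]))


# Hypothetical scores in [0, 1] for a slower vehicle ahead.
candidates = {
    "keep_speed": {"risk": 0.6, "delay": 0.0, "discomfort": 0.0},
    "slow_down": {"risk": 0.1, "delay": 0.3, "discomfort": 0.2},
    "change_lane": {"risk": 0.3, "delay": 0.1, "discomfort": 0.4},
}
weights = {"risk": 10.0, "delay": 1.0, "discomfort": 1.0}  # safety dominates

print(choose_action(candidates, weights))  # prints "slow_down"
```

Weighting risk far above delay and discomfort is the design choice that makes the planner prefer a cautious action even when it costs time.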

Enhancing Safety with Predictive AI

By anticipating other vehicles’ or pedestrians’ actions, autonomous cars can avoid accidents before they happen, significantly enhancing road safety and passenger comfort.

Navigation and Mapping Systems

Navigation and mapping are essential for autonomous vehicles to understand their location precisely and plan routes effectively. GPS and mapping data help the vehicle operate smoothly.

These systems integrate real-time environmental data with preloaded maps to guide the vehicle on safe, efficient paths. They adjust routes dynamically based on traffic and road conditions.

GPS and Route Planning

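GPS receivers determine position by timing signals broadcast by satellites, giving the vehicle its location anywhere on the globe. Standalone GPS is typically accurate only to within a few meters, so it is usually combined with map data and other sensors. This positioning is vital for navigation.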
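Once a vehicle has latitude and longitude fixes, a basic building block is the distance between two of them. The sketch below uses the haversine formula on a spherical Earth with a mean radius of 6,371 km, an approximation that is good enough for illustration but not for lane-level positioning. The function name is an assumption.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean radius; the Earth is not a perfect sphere


def haversine_m(lat1_deg, lon1_deg, lat2_deg, lon2_deg):
    """Great-circle distance in meters between two latitude/longitude points."""
    lat1, lat2 = math.radians(lat1_deg), math.radians(lat2_deg)
    d_lat = lat2 - lat1
    d_lon = math.radians(lon2_deg - lon1_deg)
    a = math.sin(d_lat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(d_lon / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


# One degree of longitude along the equator is roughly 111 km.
print(round(haversine_m(0.0, 0.0, 0.0, 1.0)))
```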

Advanced route planning algorithms use GPS data combined with digital maps to chart optimal paths, considering traffic, road restrictions, and destination preferences.

These systems continuously update routes as new information such as obstacles or changes in traffic conditions arises, ensuring timely and safe arrivals.
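Route planning and replanning can be sketched with Dijkstra's shortest-path algorithm on a small road graph whose edge weights are travel times. The graph, node names, and travel times below are made up, and production planners use far larger maps and more elaborate cost models.

```python
import heapq


def shortest_route(graph, start, goal):
    """Dijkstra's algorithm.

    `graph` maps each node to a dict of {neighbor: travel_time_s}.
    Returns (total_time, path), or (inf, []) if the goal is unreachable.
    """
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, travel_time in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + travel_time, neighbor, path + [neighbor]))
    return float("inf"), []


# Hypothetical road network; weights are travel times in seconds.
roads = {
    "A": {"B": 60, "C": 100},
    "B": {"D": 60},
    "C": {"D": 30},
    "D": {},
}
print(shortest_route(roads, "A", "D"))  # prints "(120.0, ['A', 'B', 'D'])"

# Congestion is reported on B -> D, so the planner is run again.
roads["B"]["D"] = 200
print(shortest_route(roads, "A", "D"))  # prints "(130.0, ['A', 'C', 'D'])"
```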

Software Integration and Actuator Control

Software integration unifies sensor inputs, navigation data, and vehicle control commands to operate the car seamlessly. This coordination is critical for autonomous driving.

The vehicle’s actuators, including steering, throttle, and brakes, receive precise instructions from the control software, enabling smooth and accurate maneuvering.
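A classic way to turn a target value into an actuator command is a PID controller. The sketch below is a textbook PID loop for speed control with the output clamped to a normalized throttle/brake range of -1 to 1. The gains and the command range are illustrative assumptions, and the text does not say which control method any given vehicle uses.

```python
class PIDController:
    """Textbook PID loop with output clamping."""

    def __init__(self, kp, ki, kd, output_min=-1.0, output_max=1.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.output_min, self.output_max = output_min, output_max
        self._integral = 0.0
        self._previous_error = None

    def update(self, target, measured, dt):
        """Return a clamped command for one control step of length dt seconds."""
        error = target - measured
        self._integral += error * dt
        if self._previous_error is None:
            derivative = 0.0  # no history on the first step
        else:
            derivative = (error - self._previous_error) / dt
        self._previous_error = error
        raw = self.kp * error + self.ki * self._integral + self.kd * derivative
        return max(self.output_min, min(self.output_max, raw))


# Hypothetical gains: the car is at 18 m/s and should reach 20 m/s.
controller = PIDController(kp=0.1, ki=0.02, kd=0.0)
command = controller.update(target=20.0, measured=18.0, dt=0.1)
print(round(command, 3))  # prints "0.204"
```

A positive command would be sent to the throttle and a negative one to the brakes. Clamping keeps a large error from requesting more than the actuator can deliver.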

Effective integration ensures the vehicle can respond to sensor data and navigation updates with minimal delay, maintaining safety and comfort in all driving conditions.

Current Status and Future Outlook

Autonomous vehicle technology has reached advanced stages with multiple levels of automation being tested around the world. These levels range from basic driver assistance to fully driverless systems.

Extensive testing is ongoing to ensure safety and reliability before public deployment. Real-world and simulated environments help refine the technology in diverse driving conditions.

Regulatory frameworks and safety standards are evolving to support gradual integration of autonomous vehicles into everyday traffic.

Levels of Automation and Testing

Automation in vehicles is classified under the SAE J3016 standard from Level 0 (no automation) to Level 5 (full automation). Most current autonomous systems operate at Level 2 (partial automation) or Level 3 (conditional automation).
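The six levels map naturally onto an enumeration. The sketch below uses the level names from the SAE J3016 standard and adds one helper reflecting the standard's distinction that at Levels 0 through 2 the human driver must supervise at all times. The class and function names are assumptions for this example.

```python
from enum import IntEnum


class AutomationLevel(IntEnum):
    """Driving automation levels, named after SAE J3016."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def driver_must_supervise(level: AutomationLevel) -> bool:
    """True for Levels 0-2, where the human monitors the road continuously."""
    return level <= AutomationLevel.PARTIAL_AUTOMATION


print(driver_must_supervise(AutomationLevel.PARTIAL_AUTOMATION))      # prints "True"
print(driver_must_supervise(AutomationLevel.CONDITIONAL_AUTOMATION))  # prints "False"
```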

Testing involves controlled pilot programs, closed tracks, and limited public road trials. These efforts gather data to improve AI decision-making and sensor reliability under real conditions.

Challenges such as complex urban environments and unpredictable human behavior require continuous innovations in sensors and algorithms to progress toward full autonomy.

Technological Advancements and Industry Trends

Recent breakthroughs in AI, sensor fusion, and hardware miniaturization are accelerating autonomous vehicle development. Companies invest heavily in smart sensors and machine learning models.

Industry trends focus on enhanced connectivity, vehicle-to-everything (V2X) communication, and cloud-based processing to improve safety and traffic efficiency.

Impact of Industry Collaboration

Collaboration between automakers, tech firms, and governments is crucial. Shared data and open standards foster faster progress and greater trust in autonomous technology.

These partnerships aim to deploy autonomous vehicles that reduce accidents, lower emissions, and transform urban mobility sustainably in the near future.