Cerebras Systems has drawn major attention by raising $1 billion at a $23 billion valuation, putting a spotlight on its AI chip technology.

The company’s unique wafer-scale engine challenges traditional GPU designs, promising breakthroughs in speed and efficiency for AI workloads.

As AI demand surges, Cerebras is positioning itself as a formidable competitor that Nvidia and the rest of the industry cannot overlook.

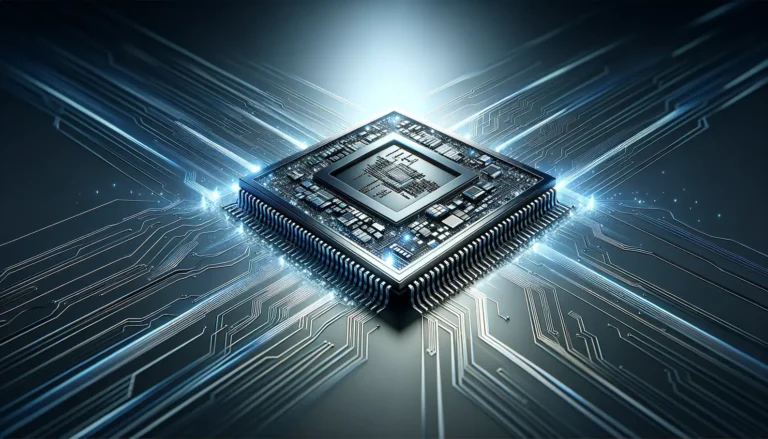

Cerebras’ Wafer-Scale Engine Technology

Cerebras Systems has raised $1 billion at a $23 billion valuation, reflecting strong investor confidence in its AI chip technology amid growing demand for alternatives to Nvidia GPUs.

The funding is intended to support further advances in AI compute infrastructure, as growing AI workloads strain memory and computing resources worldwide.

Cerebras’ Wafer-Scale Engine (WSE) technology stands at the core of this innovation, redefining how AI compute power is delivered.

Architecture and Scale of WSE-3

The WSE-3 integrates 900,000 AI cores and 44 GB of ultra-fast on-chip SRAM on a single 46,225 mm² wafer-scale chip.

With 4 trillion transistors, this monolithic chip offers unprecedented scale, reducing latency and power use compared to multi-chip GPU clusters.
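A quick back-of-envelope calculation puts these headline figures in perspective. The sketch below assumes 900,000 cores and treats 1 GB as 10^9 bytes (decimal units). The numbers are averages across the whole chip, not a description of how SRAM or transistors are actually distributed per core. The function names are illustrative only.

```python
# Back-of-envelope ratios from the WSE-3 figures quoted in the text.
# Assumptions: 900,000 cores; decimal units (1 GB = 10**9 bytes, 1 KB = 1,000 bytes).
CORES = 900_000
SRAM_BYTES = 44 * 10**9     # 44 GB of on-chip SRAM
TRANSISTORS = 4 * 10**12    # 4 trillion transistors
AREA_MM2 = 46_225           # chip area in square millimetres


def sram_per_core_kb(sram_bytes: int, cores: int) -> float:
    """Average on-chip SRAM per core, in decimal kilobytes."""
    return sram_bytes / cores / 1_000


def transistors_per_mm2(transistors: int, area_mm2: float) -> float:
    """Average transistor density across the whole wafer-scale chip."""
    return transistors / area_mm2


if __name__ == "__main__":
    print(f"SRAM per core: {sram_per_core_kb(SRAM_BYTES, CORES):.1f} KB")
    density_millions = transistors_per_mm2(TRANSISTORS, AREA_MM2) / 1e6
    print(f"Density: {density_millions:.1f} million transistors per mm^2")
```

Under these assumptions, each core has roughly 49 KB of local SRAM on average, and the chip averages about 86.5 million transistors per square millimetre.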

Cerebras claims this wafer-scale integration delivers up to 20 times faster training and inference, with better energy efficiency, for large AI models.

Key Differences from Nvidia GPUs

Nvidia’s GPUs rely on clusters of smaller chips linked by NVLink, each drawing on off-chip high-bandwidth memory (HBM). WSE-3 instead keeps its memory on the chip itself to avoid bandwidth bottlenecks.
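Memory bandwidth matters so much for inference because of how text is generated. When a model produces output one token at a time with a batch size of 1, roughly every weight must be read from memory for each token. That sets an upper bound of tokens/s ≈ bandwidth ÷ weight bytes. The sketch below is a simplified, roofline-style estimate. It ignores compute limits, KV-cache traffic and interconnect overhead. The bandwidth values are hypothetical round numbers chosen for illustration, not published specifications for any Nvidia or Cerebras product.

```python
# Simplified upper bound on single-stream decode speed when weight reads dominate.
# Assumptions: batch size 1, every weight read once per generated token,
# no compute, KV-cache, or interconnect limits. All inputs are illustrative.


def max_tokens_per_second(param_count: float, bytes_per_param: float,
                          bandwidth_bytes_per_s: float) -> float:
    """Return the bandwidth-limited ceiling on tokens generated per second."""
    weight_bytes = param_count * bytes_per_param
    return bandwidth_bytes_per_s / weight_bytes


if __name__ == "__main__":
    PARAMS = 8e9          # hypothetical 8-billion-parameter model
    BYTES_PER_PARAM = 2   # 16-bit weights
    OFF_CHIP_BW = 3e12    # hypothetical off-chip memory bandwidth, bytes/s
    ON_CHIP_BW = 3e15     # hypothetical on-chip SRAM bandwidth, bytes/s

    for label, bw in [("off-chip", OFF_CHIP_BW), ("on-chip", ON_CHIP_BW)]:
        bound = max_tokens_per_second(PARAMS, BYTES_PER_PARAM, bw)
        print(f"{label}: at most {bound:,.1f} tokens/s")
```

The bound scales linearly with bandwidth. This is why keeping the weights in on-chip memory, when they fit, can lift the ceiling on inference speed. Real systems land well below this ceiling once the ignored factors are included.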

While Nvidia’s top GPUs can lead on some raw compute measures, Cerebras says its system delivers nearly twice the energy efficiency and much faster AI inference on some tasks.

This single-chip design simplifies programming and cuts power consumption, positioning Cerebras as a compelling alternative for AI workloads demanding speed and efficiency.

AI Chip Market and Strategic Context in 2026

The AI chip market in 2026 is rapidly expanding, driven by soaring demand for high-performance computing in diverse sectors like healthcare and autonomous vehicles.

Investors are eagerly backing startups and established firms alike, fueling innovation and competition to meet escalating AI workload demands worldwide.

This growth highlights strategic shifts as companies refine chip architectures to address challenges in power efficiency and computing scale.

Market Growth, Major Players, and Investment Trends

Nvidia leads the market, with Intel and Cerebras among its prominent challengers, while newer entrants attract significant funding to challenge the established GPU-centric paradigm.

Investment trends favor AI-specific architectures optimized for large models, with funds allocated to technology breakthroughs and supply chain enhancements.

Market analysts predict continued consolidation along with fresh capital inflows to accelerate AI hardware advancements and broader adoption.

Impact of Memory Shortages and Supply Chain Dynamics

Memory shortages remain a critical bottleneck, affecting chip production timelines and driving up costs across AI hardware manufacturing.

Supply chain constraints, including wafer fabrication capacity, push chipmakers to innovate with integrated on-chip memory solutions for better resilience.

Cerebras’ wafer-scale design partially mitigates these issues by embedding a large pool of SRAM directly on the chip, reducing its dependence on external memory components.

Expert Opinions and Competitive Landscape

Industry experts recognize Cerebras as a transformative force, challenging dominant GPU architectures with its wafer-scale approach to AI processing.

Analysts emphasize the firm’s role in pushing innovation, especially as AI models grow larger and demand more optimized compute solutions beyond traditional GPUs.

The competitive landscape is intensifying, with Cerebras positioned as a key disruptor alongside incumbent giants investing heavily in AI hardware.

Cerebras CEO’s Vision and Industry Positioning

Cerebras CEO Andrew Feldman envisions democratizing AI compute with hardware that drastically accelerates model training while lowering energy consumption.

The goal is to let researchers and enterprises handle next-generation AI workloads more efficiently without sacrificing scale or speed.

By focusing on wafer-scale integration, the CEO aims to carve out a distinct niche that challenges reliance on conventional multi-chip GPU systems.

Competition from Intel, Broadcom, and Nvidia

Intel and Broadcom are ramping up their AI chip efforts, leveraging their manufacturing scale and IP portfolios to compete in specialized markets.

Nvidia remains the market leader but faces pressure as competitors like Cerebras innovate with alternative chip architectures and energy-efficient designs.

This rivalry drives a dynamic ecosystem where advances in compute density, memory integration, and software support are critical for differentiation.

Future Outlook and Broader Implications

Cerebras’ breakthrough wafer-scale technology signals a shift in AI hardware, potentially redefining how compute power scales with growing AI model sizes.

The company’s innovations could drive wider access to efficient AI hardware, lowering barriers for researchers and enterprises globally.

As AI computing demands rise, Cerebras’ approach may influence industry trends towards integrated, energy-conscious chip designs.

Potential IPO and AI Hardware Accessibility

Cerebras is expected to explore an IPO to expand its reach, enabling broader adoption of its wafer-scale AI chips across markets.

An IPO could fuel R&D investments, accelerating development of even larger and more powerful AI compute platforms.

This move aims to make high-performance AI hardware more accessible, democratizing AI model training and deployment at scale.

U.S. Tech Leadership Amid Global AI Competition

Maintaining U.S. leadership in AI hardware is critical as global rivals intensify competition in next-gen compute technologies.

Cerebras and its peers help reinforce American innovation through cutting-edge chip design, even though much leading-edge chip fabrication still takes place overseas.

Such leadership may shape global AI strategies, emphasizing tech sovereignty and competitive advantage in the AI chip arena.